
Evaluating the Integration of Edge Computing in Data Center Architecture


Welcome to Eurolab, your partner in pioneering solutions that encompass every facet of life. We are committed to delivering comprehensive Assurance, Testing, Inspection, and Certification services, empowering our global clientele with the ultimate confidence in their products and processes.


The rapid growth of IoT devices, cloud computing, and big data has led to a significant increase in data generation and processing requirements. As a result, traditional centralized data centers are facing scalability and latency challenges. To address these issues, edge computing has emerged as a viable solution for reducing latency and improving application performance. In this article, we will discuss the integration of edge computing in data center architecture, its benefits, and best practices.

Benefits of Edge Computing

Edge computing brings several advantages to data centers:

Reduced Latency: By processing data closer to its source, edge computing shortens the distance data must travel before it is processed, instead of sending it all the way to a central location. This results in lower latency and improved application performance.
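As a rough illustration, the round-trip propagation delay over optical fiber can be estimated from distance alone. This sketch assumes light travels through fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum) and ignores queuing, routing, and processing delays, so real latencies will be higher. The distances are hypothetical.

```python
# Assumption: signal speed in optical fiber is roughly 200,000 km/s,
# i.e. about 200 km per millisecond (the exact figure varies with the fiber).
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Propagation-only round-trip time in milliseconds for a one-way distance."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances: a central data center 1,500 km away vs. an edge site 15 km away.
central = round_trip_ms(1500)
edge = round_trip_ms(15)
print(f"central: {central:.2f} ms, edge: {edge:.2f} ms")
```

Propagation is only one component of latency, but it is the component that distance alone determines: adding compute at a distant central site cannot remove it, while moving processing closer to the source can.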

Improved Real-time Processing: Because data can be analyzed where it is produced, without a round trip to a distant data center, edge computing supports the near-real-time responses required by applications such as IoT sensor monitoring, video analytics, and autonomous vehicles.
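A minimal sketch of local, real-time processing: an edge node keeps a short rolling window of sensor readings and raises an alert as soon as the window's average crosses a threshold, without waiting on a central site. The class name, window size, and threshold are hypothetical choices for illustration.

```python
from collections import deque


class RollingThresholdMonitor:
    """Hypothetical edge-side monitor: flags when the rolling mean exceeds a limit."""

    def __init__(self, window_size: int, threshold: float) -> None:
        if window_size <= 0:
            raise ValueError("window_size must be positive")
        # With maxlen set, the oldest reading is dropped automatically.
        self.window = deque(maxlen=window_size)
        self.threshold = threshold

    def add(self, reading: float) -> bool:
        """Record a reading; return True if the rolling mean now exceeds the threshold."""
        self.window.append(reading)
        mean = sum(self.window) / len(self.window)
        return mean > self.threshold


# Hypothetical temperature stream (degrees C) evaluated locally on the edge node.
monitor = RollingThresholdMonitor(window_size=3, threshold=75.0)
for temp in [70.0, 72.0, 74.0, 80.0, 85.0]:
    if monitor.add(temp):
        print(f"alert: rolling mean above threshold after reading {temp}")
```

Averaging over a small window suppresses single-sample spikes while still reacting within a few readings; the window length trades responsiveness against false alarms.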

Increased Bandwidth Efficiency: By filtering, aggregating, or analyzing data on edge devices and sending only the results upstream, edge computing reduces the volume of traffic carried over the core network, which lowers bandwidth consumption and network congestion.
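The bandwidth saving comes from sending results instead of raw data. This sketch assumes a hypothetical sensor producing one reading per second and an edge node that forwards a single summary per minute; it compares the JSON-encoded size of the raw batch with the size of the summary. Exact byte counts depend on the encoding used.

```python
import json
import statistics


def summarize(readings: list[float]) -> dict:
    """Reduce a batch of raw readings to a compact summary for upstream transfer."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(statistics.fmean(readings), 2),
    }


# Hypothetical one-minute batch: 60 readings, one per second.
raw = [20.0 + (i % 10) / 10 for i in range(60)]
summary = summarize(raw)

raw_bytes = len(json.dumps(raw).encode("utf-8"))
summary_bytes = len(json.dumps(summary).encode("utf-8"))
print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The trade-off is that the core site no longer sees individual readings, so the summary must contain whatever downstream analytics actually need.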

Best Practices for Integrating Edge Computing in Data Center Architecture

When integrating edge computing into data center architecture, consider the following best practices:

Hybrid Cloud Model: Implement a hybrid cloud model that combines on-premises infrastructure with public or private cloud services. This enables organizations to scale their resources as needed and improve flexibility.

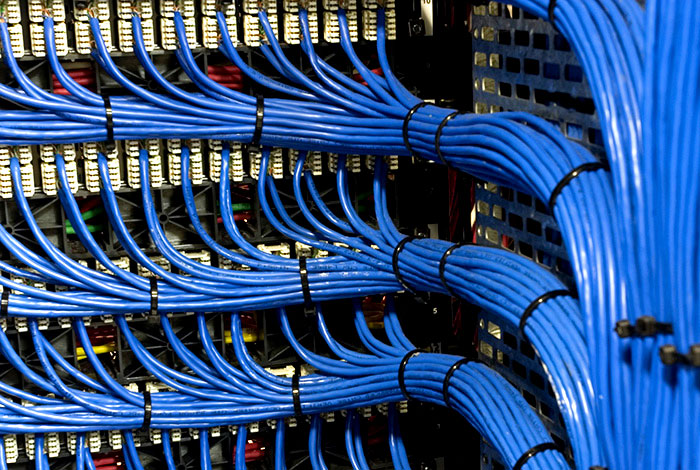

Edge Devices Placement: Strategically place edge devices in locations where they can access relevant data sources, such as near IoT sensors or video cameras. Ensure that these devices are properly secured and managed.
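One simple way to reason about placement is to measure round-trip latency from each data source to each candidate edge site, then assign every source to the closest site that still has capacity. This greedy sketch uses hypothetical site names, latencies, and capacities; a real planner would also weigh cost, security, and physical constraints.

```python
def assign_sources(latency_ms: dict[str, dict[str, float]],
                   capacity: dict[str, int]) -> dict[str, str]:
    """Greedily assign each source to its lowest-latency edge site with free capacity."""
    remaining = dict(capacity)
    assignment: dict[str, str] = {}
    for source, site_latencies in latency_ms.items():
        # Try candidate sites from lowest to highest measured latency.
        for site in sorted(site_latencies, key=site_latencies.get):
            if remaining.get(site, 0) > 0:
                assignment[source] = site
                remaining[site] -= 1
                break
        else:
            # No break: every candidate site is full.
            raise RuntimeError(f"no edge site has capacity for {source}")
    return assignment


# Hypothetical round-trip measurements (ms) from three camera feeds to two edge sites.
latency = {
    "camera-1": {"edge-a": 2.0, "edge-b": 9.0},
    "camera-2": {"edge-a": 3.0, "edge-b": 4.0},
    "camera-3": {"edge-a": 1.5, "edge-b": 8.0},
}
print(assign_sources(latency, capacity={"edge-a": 2, "edge-b": 2}))
```

Because the assignment is greedy and order-dependent, it does not guarantee the lowest total latency: here camera-3 lands on edge-b at 8.0 ms, although moving camera-2 there instead (4.0 ms) would be better overall. An optimal assignment requires a matching or optimization solver.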

Data Replication and Synchronization: Implement a data replication and synchronization mechanism to ensure that data is consistent across the network. This includes replicating data from edge devices to central locations and vice versa.
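Two-way synchronization needs a rule for resolving conflicting updates made at the edge and at the core. One common, simple (but lossy) rule is last-writer-wins: each record carries a timestamp, and the newer version wins during a merge. This sketch assumes records are keyed dictionaries of hypothetical (timestamp, value) pairs and that clocks are reasonably synchronized; it illustrates one possible approach, not a complete replication protocol.

```python
# Each record is (timestamp in seconds, value); the keys are hypothetical device IDs.
Record = tuple[float, str]


def merge_lww(local: dict[str, Record], remote: dict[str, Record]) -> dict[str, Record]:
    """Merge two replicas using last-writer-wins; on a timestamp tie, keep the local copy."""
    merged = dict(local)
    for key, (ts, value) in remote.items():
        if key not in merged or ts > merged[key][0]:
            merged[key] = (ts, value)
    return merged


edge = {"valve-7": (100.0, "open"), "pump-2": (90.0, "on")}
core = {"valve-7": (105.0, "closed"), "fan-1": (80.0, "auto")}
print(merge_lww(edge, core))
```

When timestamps differ, merging in either direction gives the same result, so both sides converge after exchanging state. The cost is that a concurrent update with an older timestamp is silently discarded, and clock skew can pick the wrong winner.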

Monitoring and Management: Develop an integrated monitoring and management system for edge computing devices, which should include real-time monitoring, performance metrics, and alerting capabilities.

Considerations When Evaluating Edge Computing Integration

When evaluating the integration of edge computing in data center architecture, consider the following factors:

Cost and ROI: Assess the cost savings and return on investment (ROI) associated with implementing edge computing. Consider the costs of hardware, software, personnel, and maintenance.
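Once costs and savings are estimated, ROI and payback period are simple ratios. The figures below are hypothetical placeholders, not benchmarks. The sketch computes ROI over a fixed horizon as (total savings - total cost) / total cost, and a simple payback period that ignores discounting.

```python
def simple_roi(upfront_cost: float, annual_operating_cost: float,
               annual_savings: float, years: int) -> float:
    """Return ROI over the horizon as a fraction (0.25 means 25%)."""
    total_cost = upfront_cost + annual_operating_cost * years
    total_savings = annual_savings * years
    return (total_savings - total_cost) / total_cost


def payback_years(upfront_cost: float, annual_operating_cost: float,
                  annual_savings: float) -> float:
    """Years until cumulative net savings cover the upfront cost (undiscounted)."""
    net_annual = annual_savings - annual_operating_cost
    if net_annual <= 0:
        return float("inf")  # the deployment never pays for itself
    return upfront_cost / net_annual


# Hypothetical figures: hardware, software, and installation up front, then yearly amounts.
upfront = 200_000.0
operating = 30_000.0   # maintenance, personnel, connectivity
savings = 110_000.0    # e.g. reduced bandwidth and central processing charges
print(f"5-year ROI: {simple_roi(upfront, operating, savings, 5):.0%}")
print(f"payback: {payback_years(upfront, operating, savings):.1f} years")
```

With these inputs, total cost over five years is 350,000 against 550,000 in savings, giving an ROI of about 57% and a payback period of 2.5 years. A fuller analysis would discount future cash flows.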

Scalability and Flexibility: Evaluate the scalability and flexibility of your infrastructure to accommodate growing workloads and changing application requirements.
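A common back-of-the-envelope scalability check is how many nodes a site needs to carry peak load while keeping utilization below a target, plus spare nodes for failures. The sketch assumes a hypothetical per-node throughput, a 70% target utilization, and one spare node (N+1); adjust all three for your workload.

```python
import math


def nodes_required(peak_load: float, per_node_capacity: float,
                   target_utilization: float = 0.7, spares: int = 1) -> int:
    """Nodes needed so peak load stays under the target utilization, plus spare nodes."""
    if not 0 < target_utilization <= 1:
        raise ValueError("target_utilization must be in (0, 1]")
    usable_per_node = per_node_capacity * target_utilization
    return math.ceil(peak_load / usable_per_node) + spares


# Hypothetical: 4,000 requests/s at peak today, 1,000 requests/s per node.
print(nodes_required(4000, 1000))        # 7
print(nodes_required(4000 * 1.5, 1000))  # 10, after 50% growth
```

Rerunning the calculation for projected growth shows early whether a site's rack space, power, and cooling can accommodate the extra nodes.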

Security and Compliance: Ensure that your data center architecture meets security and compliance requirements for sensitive data processing.

Skills and Training: Assess the skills and training needs of personnel involved in managing edge computing devices.

Best Practices for Edge Computing Infrastructure Design

When designing an edge computing infrastructure, consider the following best practices:

Modular Architecture: Implement a modular architecture that allows for easy upgrades, replacements, or additions of components.

Redundancy and Failover: Ensure redundancy and failover capabilities to minimize downtime and data loss in case of hardware failures.
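Redundancy pays off because independent failures rarely coincide. If each of n redundant components is available a fraction A of the time and any single one can carry the workload, the combined availability is 1 - (1 - A)^n. This sketch assumes independent failures and instantaneous failover, which real systems only approximate (a shared power feed or network path breaks the independence assumption). The 99% figure is hypothetical.

```python
def parallel_availability(component_availability: float, n: int) -> float:
    """Availability of n redundant components where any single one suffices."""
    if not 0 <= component_availability <= 1 or n < 1:
        raise ValueError("availability must be in [0, 1] and n >= 1")
    return 1 - (1 - component_availability) ** n


HOURS_PER_YEAR = 8760
for n in (1, 2):
    a = parallel_availability(0.99, n)
    downtime_h = (1 - a) * HOURS_PER_YEAR
    print(f"n={n}: availability {a:.4%}, expected downtime {downtime_h:.1f} h/year")
```

Under these assumptions, adding a second 99%-available node cuts expected downtime from about 87.6 hours to under one hour per year.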

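Power and Cooling Requirements: Edge sites such as equipment closets, cabinets, or outdoor enclosures often lack the power and cooling of a purpose-built data center, so size the power supply, backup power, and cooling for each site's equipment load and ambient conditions.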
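Sizing starts from the IT load. Nearly all electrical power drawn by IT equipment ends up as heat, so the heat the cooling system must remove from the equipment roughly equals its power draw (1 W is about 3.412 BTU/h), and total facility draw can be estimated with a power usage effectiveness (PUE) ratio. The device wattages and the PUE of 1.5 below are hypothetical.

```python
BTU_PER_HOUR_PER_WATT = 3.412  # 1 W of dissipated heat is about 3.412 BTU/h


def site_power_and_cooling(device_watts: list[float],
                           pue: float) -> tuple[float, float, float]:
    """Return (IT load in W, estimated facility draw in W, IT heat load in BTU/h)."""
    it_load = sum(device_watts)
    facility_draw = it_load * pue  # PUE = total facility power / IT equipment power
    # Heat from the IT equipment only; power-conversion losses add to the real total.
    cooling_btu_h = it_load * BTU_PER_HOUR_PER_WATT
    return it_load, facility_draw, cooling_btu_h


# Hypothetical edge cabinet: two servers, a switch, and a gateway.
it, total, cooling = site_power_and_cooling([450.0, 450.0, 150.0, 50.0], pue=1.5)
print(f"IT load {it:.0f} W, facility draw {total:.0f} W, cooling {cooling:,.0f} BTU/h")
```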

Data Center Design: Design the central data center with scalability, flexibility, and efficiency in mind, so that it can absorb the data and workloads arriving from a growing number of edge sites.

Q&A

What is Edge Computing?

Edge computing refers to processing data close to where it is generated or consumed, rather than sending it to a centralized location for processing. This approach reduces latency, improves application performance, and increases bandwidth efficiency.

How does Edge Computing differ from Cloud Computing?

While both edge computing and cloud computing involve distributed computing architectures, the main difference lies in their deployment models and where data is processed. Edge computing processes data at the edge of the network (near the source), whereas cloud computing typically processes it in centralized data centers.

What are the Benefits of Edge Computing for IoT Devices?

Edge computing benefits IoT devices by reducing latency, improving real-time processing, and increasing bandwidth efficiency. By processing data locally, on the IoT devices themselves or on nearby gateways, edge computing enables applications such as predictive maintenance, real-time monitoring, and autonomous decision-making.

How do I select the right Edge Computing Device for my Organization?

When selecting an edge computing device, consider factors such as performance requirements, power consumption, cooling needs, and scalability. Evaluate the compatibility of the device with your existing infrastructure and assess its integration with other systems and applications.
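One way to make these trade-offs explicit is a weighted scoring matrix: rate each candidate device on each criterion, weight the criteria by importance, and compare the totals. The criteria mirror the factors named above; the weights, the 1 to 5 ratings, and the device names are hypothetical.

```python
def weighted_scores(weights: dict[str, float],
                    ratings: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Return (device, score) pairs sorted best-first; weights are normalized to sum to 1."""
    total_weight = sum(weights.values())
    results = []
    for device, device_ratings in ratings.items():
        score = sum(weights[c] * device_ratings[c] for c in weights) / total_weight
        results.append((device, round(score, 2)))
    return sorted(results, key=lambda pair: pair[1], reverse=True)


# Hypothetical importance weights and 1-5 ratings for two candidate devices.
weights = {"performance": 4, "power": 3, "cooling": 2, "scalability": 2, "compatibility": 3}
ratings = {
    "device-x": {"performance": 5, "power": 2, "cooling": 2, "scalability": 4, "compatibility": 3},
    "device-y": {"performance": 3, "power": 5, "cooling": 4, "scalability": 3, "compatibility": 5},
}
for device, score in weighted_scores(weights, ratings):
    print(device, score)
```

Here the lower-performing device-y scores higher overall because of its power efficiency and compatibility, which is exactly the kind of trade-off a scoring matrix surfaces. The result is only as good as the weights, so they are worth agreeing on before rating candidates.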

What are some Common Challenges Associated with Edge Computing Integration?

Common challenges associated with edge computing integration include:
