Welcome to Eurolab, your partner in pioneering solutions that encompass every facet of life. We are committed to delivering comprehensive Assurance, Testing, Inspection, and Certification services, empowering our global clientele with the ultimate confidence in their products and processes.

Standards for Military Artificial Intelligence Systems: Ensuring Safety, Effectiveness, and Ethics

Artificial intelligence (AI) has become a critical component of modern military operations, enhancing capabilities in areas such as surveillance, reconnaissance, communication, and decision-making. However, the growing use of AI in military systems also raises significant concerns about safety, effectiveness, and ethics. To address these concerns, governments, standards organizations, and industry have begun to establish standards for military AI systems.

The Need for Standards

Standards for military AI systems are essential to ensure that these technologies are developed, deployed, and used responsibly. The rapid development of AI has outpaced regulatory frameworks, creating a pressing need for standardized guidelines and protocols. Without such standards, AI system failures, cyberattacks, or other unintended consequences could lead to catastrophic outcomes.

Key Considerations in Establishing Standards

Standards for military AI systems must address several critical considerations:

Autonomy and Decision-Making: Military AI systems often involve autonomous decision-making capabilities, which can be complex and high-risk. Standards should define the limits of autonomy, ensuring that human oversight and control are maintained.

Data Quality and Integrity: AI systems rely on accurate and reliable data to make decisions. Standards must address issues related to data quality, including data sources, accuracy, completeness, and integrity. This includes measures for detecting and mitigating biases in training data.
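Data-quality requirements are easiest to enforce when they are expressed as automated checks that run before a dataset is accepted for training. The sketch below is a minimal illustration, assuming tabular records stored as Python dicts; the function names `check_data_quality` and `passes`, the field names, and the acceptance thresholds are all hypothetical. It counts missing values, flags values outside declared ranges, and reports the share of each label as a crude proxy for under-represented classes (real bias analysis goes well beyond label counts).

```python
from collections import Counter


def check_data_quality(records, required_fields, valid_ranges, label_field):
    """Summarize completeness, validity, and label balance of a dataset.

    records: list of dicts (hypothetical tabular training data)
    required_fields: fields that must be present and not None
    valid_ranges: {field: (low, high)} inclusive bounds for numeric fields
    label_field: field holding the class label
    """
    missing = Counter()
    out_of_range = Counter()
    labels = Counter()
    for rec in records:
        for name in required_fields:
            if rec.get(name) is None:
                missing[name] += 1
        for name, (low, high) in valid_ranges.items():
            value = rec.get(name)
            if value is not None and not low <= value <= high:
                out_of_range[name] += 1
        if rec.get(label_field) is not None:
            labels[rec[label_field]] += 1
    labelled = sum(labels.values())
    return {
        "total": len(records),
        "missing": dict(missing),
        "out_of_range": dict(out_of_range),
        # Share of each label: a crude proxy for under-represented classes.
        "label_share": {k: v / labelled for k, v in labels.items()},
    }


def passes(report, max_missing_rate=0.01, min_label_share=0.2):
    """Apply illustrative (hypothetical) acceptance thresholds to a report."""
    total = report["total"]
    if total == 0:
        return False
    if any(n / total > max_missing_rate for n in report["missing"].values()):
        return False
    if report["out_of_range"]:
        return False
    return all(share >= min_label_share for share in report["label_share"].values())


data = [
    {"temperature_c": 21.5, "humidity_pct": 40.0, "label": "normal"},
    {"temperature_c": None, "humidity_pct": 38.0, "label": "normal"},
    {"temperature_c": 23.0, "humidity_pct": 150.0, "label": "fault"},
]
report = check_data_quality(
    data, ["temperature_c", "humidity_pct"], {"humidity_pct": (0.0, 100.0)}, "label"
)
print(report["missing"])       # {'temperature_c': 1}
print(report["out_of_range"])  # {'humidity_pct': 1}
print(passes(report))          # False
```

Keeping the report separate from the pass/fail decision lets a program record the raw measurements for audit while applying whatever thresholds its governing standard specifies.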

Standards Development

Several governments and organizations have begun work on standards and guidance for military AI systems:

National Defense Authorization Act (NDAA): The NDAA for Fiscal Year 2019 directed the U.S. Secretary of Defense to designate an official to coordinate the Department of Defense's artificial intelligence activities and to develop a strategic plan for developing AI technologies and transitioning them into operational use.

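International Organization for Standardization (ISO): Through its joint subcommittee with the IEC, ISO/IEC JTC 1/SC 42, ISO develops general-purpose standards for AI and machine learning, such as ISO/IEC 22989 (AI concepts and terminology) and ISO/IEC 42001 (AI management systems), which can be applied in sectors including defense. These efforts address topics such as interoperability, security, and safety.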

Standards for Transparency, Fairness, and Human Oversight

Several key areas require standardized guidelines:

1. Algorithmic Transparency: Standards should ensure that the decision-making processes of military AI systems are transparent and explainable.

2. Bias and Fairness: Measures must be taken to prevent biases in training data and algorithmic decision-making.

3. Human Oversight and Control: Protocols should define the limits of autonomy, ensuring human oversight and control over AI system decisions.
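One common way to "define the limits of autonomy" in software is an explicit autonomy envelope: the system may act alone only when a proposed action falls inside limits set in advance, and everything else is escalated to a human whose answer is final. The sketch below is a minimal illustration of that pattern, not a prescribed design; the `Risk` levels, `ProposedAction`, `OversightPolicy`, `decide`, and the default limits are all hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Callable


class Risk(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class ProposedAction:
    description: str
    risk: Risk
    confidence: float  # the model's self-reported confidence in [0, 1]


@dataclass
class OversightPolicy:
    """Hypothetical autonomy envelope: the highest risk level the system may
    act on alone, and the minimum confidence required to do so."""
    max_autonomous_risk: Risk = Risk.LOW
    min_autonomous_confidence: float = 0.9


def decide(action: ProposedAction,
           policy: OversightPolicy,
           ask_operator: Callable[[ProposedAction], bool]) -> str:
    """Return 'execute' or 'reject'. Actions outside the envelope are escalated
    to a human operator; if the operator does not approve, the default is deny."""
    within_envelope = (action.risk <= policy.max_autonomous_risk
                       and action.confidence >= policy.min_autonomous_confidence)
    if within_envelope:
        return "execute"
    return "execute" if ask_operator(action) else "reject"


def operator_declines(action: ProposedAction) -> bool:
    # Stand-in for a real operator interface; here the human always declines.
    return False


policy = OversightPolicy()
print(decide(ProposedAction("update route", Risk.LOW, 0.95), policy, operator_declines))         # execute
print(decide(ProposedAction("change mission plan", Risk.HIGH, 0.99), policy, operator_declines))  # reject
```

The design choice worth noting is default-deny: high model confidence alone never moves an action outside the envelope into autonomous execution, so a human remains the deciding authority for higher-risk actions.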

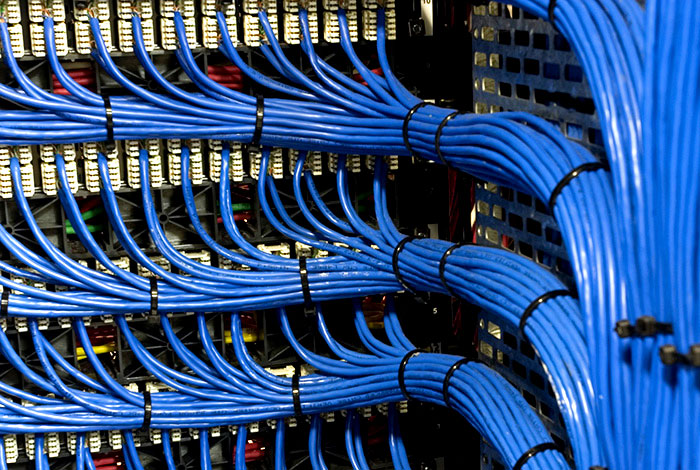

Standards for Cybersecurity

Cybersecurity is a critical aspect of military AI systems:

1. Data Protection: Standards must ensure the secure storage and transmission of sensitive data.

2. Authentication and Authorization: Protocols should verify user identity and restrict access to authorized personnel only.

3. Anomaly Detection and Response: Systems should detect and respond to potential cyber threats in real time.
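As a concrete illustration of anomaly detection, the sketch below flags a monitored metric when it deviates sharply from its recent baseline. It uses a rolling z-score, one of the simplest statistical techniques; operational systems typically use more robust methods. The class name `RollingZScoreDetector`, the window and threshold defaults, and the example metric (failed logins per minute) are hypothetical.

```python
import math
from collections import deque


class RollingZScoreDetector:
    """Flag a value as anomalous when it lies more than `threshold` standard
    deviations from the mean of the last `window` normal values."""

    def __init__(self, window: int = 20, threshold: float = 3.0, min_history: int = 5):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def update(self, value: float) -> bool:
        anomalous = False
        if len(self.values) >= self.min_history:
            mean = sum(self.values) / len(self.values)
            var = sum((v - mean) ** 2 for v in self.values) / len(self.values)
            std = math.sqrt(var)
            if std > 0:
                anomalous = abs(value - mean) / std > self.threshold
            else:
                # A perfectly flat baseline: any change at all is unusual.
                anomalous = value != mean
        # Only normal observations extend the baseline, so a burst of attack
        # traffic does not immediately become the new "normal".
        if not anomalous:
            self.values.append(value)
        return anomalous


detector = RollingZScoreDetector()
failed_logins_per_minute = [2, 3, 2, 4, 3, 2, 3, 40, 3]
flags = [detector.update(x) for x in failed_logins_per_minute]
print(flags)  # [False, False, False, False, False, False, False, True, False]
```

Detection is only half of the requirement: in practice a flagged value would feed a response procedure (alerting, isolating a component, or requiring re-authentication) defined by the applicable standard.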

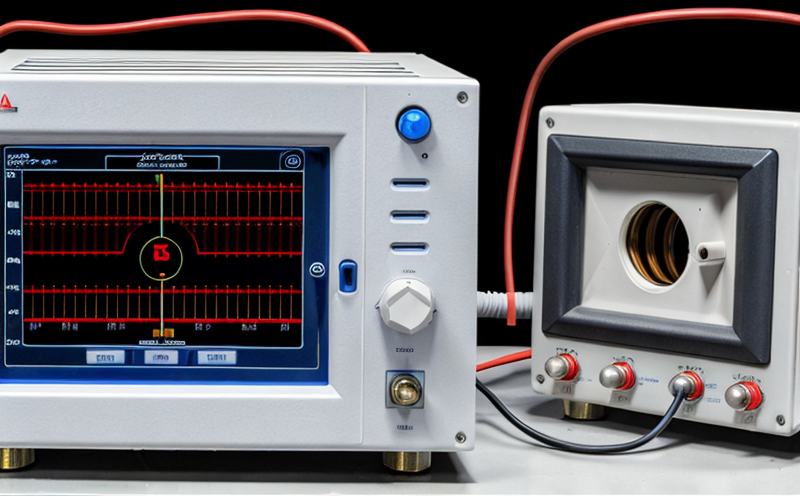

Standards for Testing, Validation, and Verification

Testing, validation, and verification (TVV) are essential throughout the life cycle of military AI systems:

1. Simulation-Based Testing: Protocols should provide for simulation-based testing to validate system performance across a wide range of scenarios.

2. In-Service Testing: Standards must ensure that military AI systems undergo rigorous in-service testing and evaluation to identify potential issues.
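Simulation-based testing typically means running the system under test against many generated scenarios and comparing its measured performance with a stated requirement. The sketch below is a minimal, reproducible harness under simplifying assumptions: a hypothetical sensor model (true value plus Gaussian noise), a hypothetical ground-truth rule, a toy `threshold_system` standing in for the AI component, and an illustrative 0.9 accuracy requirement. None of these names or numbers come from a real standard.

```python
import random
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Scenario:
    name: str
    noise_std: float  # simulated sensor noise level


def simulate_reading(true_value: float, scenario: Scenario, rng: random.Random) -> float:
    """Hypothetical sensor model: the true value plus Gaussian noise."""
    return true_value + rng.gauss(0.0, scenario.noise_std)


def evaluate(system: Callable[[float], bool],
             scenarios: List[Scenario],
             trials: int = 1000,
             seed: int = 0) -> Dict[str, float]:
    """Return accuracy per scenario for a binary system that should answer
    True when the true value exceeds 1.0 (an illustrative ground-truth rule)."""
    results = {}
    for scenario in scenarios:
        rng = random.Random(seed)  # same seed per scenario for reproducibility
        correct = 0
        for _ in range(trials):
            true_value = rng.uniform(0.0, 2.0)
            expected = true_value > 1.0
            observed = simulate_reading(true_value, scenario, rng)
            correct += system(observed) == expected
        results[scenario.name] = correct / trials
    return results


def threshold_system(reading: float) -> bool:
    """Hypothetical system under test: a fixed threshold on the reading."""
    return reading > 1.0


REQUIRED_ACCURACY = 0.9  # illustrative requirement
scenarios = [Scenario("clear", 0.0), Scenario("degraded", 0.3), Scenario("severe", 1.0)]
accuracy = evaluate(threshold_system, scenarios)
for name, acc in accuracy.items():
    verdict = "PASS" if acc >= REQUIRED_ACCURACY else "FAIL"
    print(f"{name}: {acc:.3f} {verdict}")
```

Seeding each scenario identically means every scenario sees the same underlying draws, so differences in accuracy reflect the noise level rather than random variation between runs, and any failure can be reproduced exactly.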

Q&A

Q: What are the primary concerns regarding military AI systems?

A: The primary concerns include safety, effectiveness, and ethics, as well as issues related to autonomy, data quality, cybersecurity, and human oversight.

Q: How can standards for military AI systems be developed and implemented?

A: Various organizations, governments, and industries are working together to develop and implement standards. Examples include the NDAA, ISO, and industry-led initiatives.

Q: What are some key considerations in establishing standards for military AI systems?

A: Key considerations include autonomy and decision-making, data quality and integrity, algorithmic transparency, bias and fairness, human oversight and control, cybersecurity, testing, validation, and verification.

Q: How can the risks associated with military AI systems be mitigated?

A: Risks can be mitigated through rigorous standards development, implementation, and enforcement. This includes addressing issues related to autonomy, data quality, cybersecurity, and human oversight.

Q: What role do industry-led initiatives play in developing standards for military AI systems?

A: Industry-led initiatives are critical in driving the development of standards, as they bring expertise and resources to the process. Examples include collaborations between technology companies and government agencies.

Q: Can you provide more information on algorithmic transparency in military AI systems?

A: Algorithmic transparency is essential for ensuring that decision-making processes are explainable and accountable. This involves developing and implementing protocols for auditing, logging, and analyzing AI system decisions.
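Logging only supports accountability if the log itself can be trusted. One widely used technique is a hash chain, in which each entry stores the hash of the previous entry so that editing or deleting a past record breaks verification. The sketch below is a minimal illustration; the `AuditLog` class and the fields in the example decision record are hypothetical, and it uses only Python's standard `hashlib`, `json`, and `copy` modules.

```python
import copy
import hashlib
import json
from dataclasses import dataclass, field
from typing import List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


@dataclass
class AuditLog:
    """Append-only decision log in which each entry records the SHA-256 hash
    of the previous entry, so later edits or deletions break the chain."""
    entries: List[dict] = field(default_factory=list)

    @staticmethod
    def _digest(record: dict) -> str:
        # Canonical JSON (sorted keys) so the same record always hashes identically.
        payload = json.dumps(record, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, decision: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        # Deep-copy so later changes to the caller's dict cannot alter the log.
        record = {"index": len(self.entries),
                  "decision": copy.deepcopy(decision),
                  "prev_hash": prev_hash}
        entry = dict(record, hash=self._digest(record))
        self.entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        prev_hash = GENESIS
        for i, entry in enumerate(self.entries):
            record = {k: entry[k] for k in ("index", "decision", "prev_hash")}
            if entry["index"] != i or entry["prev_hash"] != prev_hash:
                return False
            if self._digest(record) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"model": "classifier-v1", "input_id": "frame-0001",
            "output": "vehicle", "confidence": 0.93, "operator": "approved"})
log.append({"model": "classifier-v1", "input_id": "frame-0002",
            "output": "none", "confidence": 0.88, "operator": "approved"})
print(log.verify())  # True
log.entries[0]["decision"]["output"] = "person"  # simulated tampering
print(log.verify())  # False
```

A hash chain makes tampering detectable, not impossible: someone with full write access could recompute every hash, so in practice the latest hash would also be stored somewhere the logging system itself cannot modify.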

Q: What is the significance of bias and fairness in military AI systems?

A: Bias and fairness are critical considerations to prevent unintended consequences or discriminatory outcomes. Standards must address issues related to data quality and algorithmic decision-making.

Q: How can cybersecurity standards be integrated into military AI systems?

A: Cybersecurity standards should be integrated throughout the development, deployment, and operation of military AI systems. This includes measures for protecting sensitive data, authenticating users, and detecting anomalies.

Q: Can you explain the importance of human oversight and control in military AI systems?

A: Human oversight and control are essential to prevent unintended consequences or loss of control over autonomous systems. Protocols should define limits of autonomy and ensure that humans can intervene when necessary.

By developing and implementing standards for military AI systems, organizations, governments, and industries can mitigate risks associated with these technologies and ensure their safe, effective, and ethical use in military operations.
