-

IT and Data Center Certification-

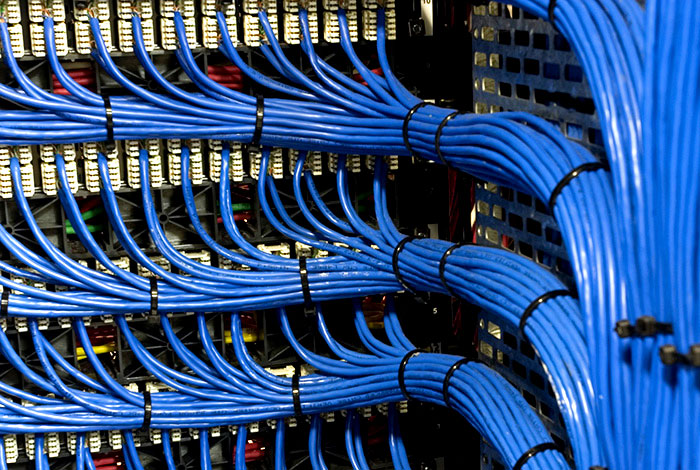

IT Infrastructure Testing for Data Centers-

Testing Data Center Backup and Archiving Systems for Reliability



In today's data-driven world, organizations rely heavily on their data center infrastructure to store and process vast amounts of data. Ensuring that this critical infrastructure is reliable is essential: a single failure or data loss can have severe consequences, including business disruption, financial losses, and reputational damage.

One of the key components of a reliable data center infrastructure is the backup and archiving system. These systems are designed to protect against data loss by creating copies of critical data and storing them safely for future use. However, simply installing these systems is not enough; they must be thoroughly tested to ensure their reliability and effectiveness in the event of an actual disaster.

Testing Data Center Backup and Archiving Systems

When it comes to testing data center backup and archiving systems, there are several key considerations. First and foremost, it's essential to understand that testing involves more than just running a few simple checks or verifying that the system is online. A comprehensive test program must be designed to simulate real-world scenarios, including failures, disasters, and human error.

Here are some of the key aspects of testing data center backup and archiving systems:

Simulation Testing: This type of testing involves simulating actual disaster scenarios to test the reliability of the backup and archiving system. For example, a test might involve simulating a fire or flood in the data center, followed by a manual failover to an alternate site.

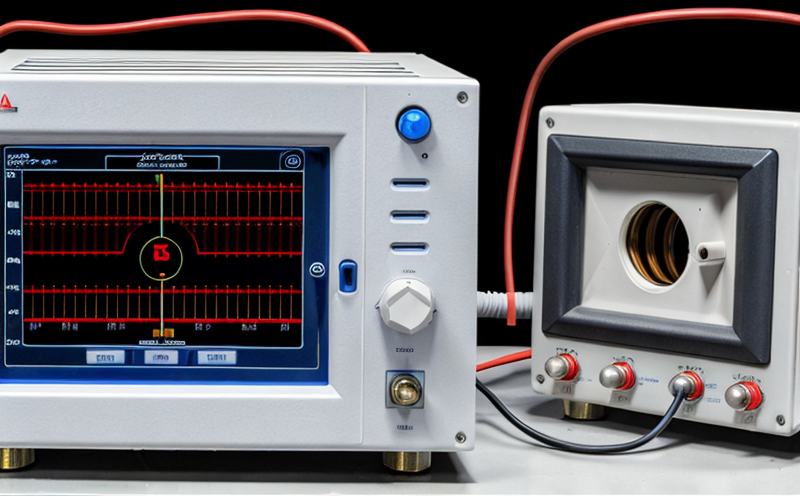

Recovery Time Objective (RTO) Testing: RTO is the maximum time allowed for data recovery after a disaster. To test this, the backup and archiving system must be triggered to restore data from a backup copy. The time taken to complete this process should be measured and compared against the RTO specified in the organization's disaster recovery plan.

Data Integrity Testing: This involves verifying that backups are accurate and can be restored with no loss of data or corruption. To test this, restore a backup to an isolated location and compare the restored data against the original, for example by comparing checksums. A test copy of a backup can also be intentionally corrupted or modified to confirm that the corruption is detected during restoration rather than passing unnoticed.

Alternate Site Recovery (ASR) Testing: ASR is a critical component of disaster recovery planning, as it involves recovering data from an alternate site in case the primary site becomes unavailable. To test this, the backup and archiving system must be triggered to restore data from the alternate site, followed by verification that the restored data is accurate and complete.
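The RTO and data integrity checks described above can be combined in a small test harness. The following is a minimal sketch, not a definitive implementation: the function names `sha256_of` and `run_restore_test` are hypothetical, the `restore` callable stands in for whatever command your backup product uses to perform a restore, and the checksum is assumed to have been recorded from the source data when the backup was taken.

```python
import hashlib
import shutil
import tempfile
import time
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1024 * 1024), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_restore_test(restore, restored_path: Path,
                     expected_sha256: str, rto_seconds: float) -> dict:
    """Time a restore and check the restored file against a known checksum.

    `restore` is a zero-argument callable that performs the restore
    (hypothetical stand-in for the real backup tool's restore command).
    """
    started = time.monotonic()
    restore()
    elapsed = time.monotonic() - started
    return {
        "elapsed_seconds": elapsed,
        "within_rto": elapsed <= rto_seconds,
        "intact": sha256_of(restored_path) == expected_sha256,
    }


if __name__ == "__main__":
    # Demo with temporary files; a file copy plays the role of the restore.
    with tempfile.TemporaryDirectory() as workdir:
        source = Path(workdir) / "source.bin"
        backup = Path(workdir) / "backup.bin"
        restored = Path(workdir) / "restored.bin"
        source.write_bytes(b"critical data" * 1000)
        recorded_checksum = sha256_of(source)  # recorded at backup time
        shutil.copyfile(source, backup)

        result = run_restore_test(
            lambda: shutil.copyfile(backup, restored),
            restored,
            recorded_checksum,
            rto_seconds=60.0,
        )
        print(result)
```

Running the same function against a deliberately damaged test copy of the backup should report `intact` as false, which confirms that the check actually detects corruption. The same harness could be pointed at an alternate site by passing a `restore` callable that pulls from that site.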

Restoring Data from Backups

Restoring data from backups can be a complex process, especially when it comes to large datasets or high-availability systems. To ensure that this process can be completed successfully, several key factors must be considered:

Backup Data Sets: The backup system should create separate backup data sets for different types of data, such as database files, virtual machine images, and file shares. This allows administrators to selectively restore only the data that is needed.

Data Compression and Encryption: To reduce storage costs and improve security, many organizations compress or encrypt their backups. However, this can add complexity to the restoration process. Administrators must ensure that they have the necessary software and keys to decompress or decrypt backups.

Versioning and Retention: The backup system should support versioning, which allows administrators to restore data from previous versions in case of corruption or overwrite errors. Additionally, a retention policy should be implemented to ensure that old backups are not deleted prematurely.
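As an illustration of the retention point above, the sketch below shows one simple way a retention policy could decide which backup versions are safe to delete. The function name `backups_to_prune` and the parameters `keep_days` and `keep_min` are hypothetical; real backup products expose their own retention settings, and the rule used here (an age cutoff plus a minimum number of versions that are always kept) is only one possible policy.

```python
from datetime import datetime, timedelta


def backups_to_prune(timestamps, now, keep_days, keep_min):
    """Return the backup timestamps a simple retention policy would delete.

    A backup is kept if it is newer than `keep_days` days, or if it is among
    the `keep_min` most recent backups, so that pruning never removes every
    restorable version.
    """
    newest_first = sorted(timestamps, reverse=True)
    protected = set(newest_first[:keep_min])
    cutoff = now - timedelta(days=keep_days)
    return [ts for ts in newest_first if ts < cutoff and ts not in protected]


if __name__ == "__main__":
    now = datetime(2024, 1, 31)
    history = [now - timedelta(days=age) for age in (1, 10, 40, 60)]
    for ts in backups_to_prune(history, now, keep_days=30, keep_min=3):
        print("would delete backup from", ts.date())
```

Keeping a minimum number of versions regardless of age guards against the case where backups stop running for a while and an age-only rule would otherwise delete every remaining copy.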

Common Challenges

While testing data center backup and archiving systems is crucial for ensuring reliability, several common challenges must be addressed:

Resource Constraints: Testing often requires significant resources, including hardware, software, and personnel. Organizations may struggle to allocate sufficient resources for comprehensive testing.

Complexity of Systems: Modern data centers are increasingly complex, with many different components and technologies involved. This can make it difficult to design effective test scenarios and analyze results.

Lack of Testing Frequency: All too often, organizations fail to conduct regular testing of their backup and archiving systems. This can lead to a false sense of security and leave critical vulnerabilities in the system undetected.

Best Practices

To ensure that data center backup and archiving systems are reliable and effective, several best practices should be followed:

Regular Testing: Schedule regular testing to ensure that systems are functioning as intended.

Comprehensive Test Scenarios: Design test scenarios that simulate real-world failures and disasters.

Collaboration with IT Teams: Involve all relevant IT teams in the testing process to ensure that everyone understands their roles and responsibilities.

Continuous Monitoring: Continuously monitor systems for vulnerabilities and address any issues promptly.
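One way to keep regular testing from slipping is to track when each system was last tested and flag those that are overdue. The sketch below assumes a hypothetical record of last test dates and per-system test intervals; the function name `overdue_tests`, the system names, and the interval values are illustrative only.

```python
from datetime import date, timedelta


def overdue_tests(last_tested, interval_days, today):
    """Return the names of systems whose last restore test is too old.

    `last_tested` maps a system name to the date of its last test, and
    `interval_days` maps the same names to the allowed days between tests.
    """
    overdue = []
    for name, tested_on in last_tested.items():
        allowed = timedelta(days=interval_days[name])
        if today - tested_on > allowed:
            overdue.append(name)
    return sorted(overdue)


if __name__ == "__main__":
    last_tested = {
        "database-backups": date(2024, 1, 1),
        "file-share-archive": date(2024, 3, 1),
    }
    # Shorter interval for the more critical system (illustrative values).
    interval_days = {"database-backups": 30, "file-share-archive": 90}
    print(overdue_tests(last_tested, interval_days, today=date(2024, 4, 1)))
```

A report like this could be run on a schedule and its output sent to the teams responsible for each system, so that missed tests are noticed promptly.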

Conclusion

Testing data center backup and archiving systems is a critical component of disaster recovery planning. By simulating real-world scenarios, verifying RTOs, checking data integrity, and testing ASR capabilities, organizations can ensure that their backup and archiving systems are reliable and effective. However, several common challenges must be addressed to ensure the success of these efforts.

Q&A Section

Q: What is the difference between a backup system and an archiving system?

A: A backup system creates copies of data for disaster recovery purposes, while an archiving system stores infrequently accessed or historical data. While both systems are used to protect data, they serve different purposes and have distinct requirements.

Q: How often should I test my backup and archiving systems?

A: Testing frequency will depend on various factors, including the size of your organization, the complexity of your systems, and the risk tolerance of your management. As a general rule, testing should be performed at least quarterly, with more frequent testing recommended for high-risk or critical systems.

Q: What are some common mistakes when testing data center backup and archiving systems?

A: Common mistakes include failing to simulate real-world failures, neglecting to test RTOs, and not checking data integrity. Additionally, not involving all relevant IT teams in the testing process can lead to misunderstandings or miscommunication.

Q: How do I choose between cloud-based and on-premises backup and archiving systems?

A: The choice between cloud-based and on-premises systems depends on your organization's specific needs and requirements. Consider factors such as data size, regulatory compliance, security concerns, and budget constraints when making a decision.

Q: What is the role of a Disaster Recovery Plan in testing data center backup and archiving systems?

A: A Disaster Recovery Plan outlines the procedures for responding to disasters or failures. It serves as a guide for testing data center backup and archiving systems, ensuring that all critical components are tested and validated according to the plan's requirements.

Q: Can I use automated tools to test my backup and archiving systems?

A: Yes, various automated tools can be used to simplify the testing process. However, these tools should not replace manual testing entirely, as they may not simulate real-world failures or identify all vulnerabilities.

Q: What is the importance of versioning in data center backup and archiving systems?

A: Versioning allows administrators to restore data from previous versions in case of corruption or overwrite errors. This ensures that critical data can be recovered even if the latest backups are corrupted or incomplete.

Q: Can I test my backup and archiving systems with a single large dataset, or should I use smaller datasets for testing?

A: It is recommended to use a combination of both small and large datasets for testing. Smaller datasets allow administrators to quickly identify issues, while larger datasets provide more realistic results that can be used to inform disaster recovery planning.

Q: How do I know if my backup and archiving systems are reliable enough for high-availability applications?

A: Consider using tools such as RTO testers or data integrity checkers to measure the reliability of your backup and archiving systems. Additionally, perform regular testing with simulated failures and disasters to verify that systems can recover quickly and accurately.

Q: Can I skip testing my backup and archiving systems if they have been certified by a third-party vendor?

A: No. Even if a system has been certified by a third-party vendor, it is still essential to perform regular testing to ensure that the system meets your organization's specific requirements and disaster recovery plan.
